In listing the Common Core State Standards’ three shifts, the authors define “Rigor” by stating that:

“rigor refers to deep, authentic command of mathematical concepts, not making math harder or introducing topics at earlier grades. To help students meet the standards, educators will need to pursue, with equal intensity, three aspects of rigor in the major work of each grade: conceptual understanding, procedural skills and fluency, and application.”

Clearly we want all students to have a “deep, authentic command of mathematical concepts” and a balanced set of procedural skills and fluency, conceptual understanding, and application. So my question is, do we know what that looks like when we see it?

Consider standard 4.MD.3, which states:

Apply the area and perimeter formulas for rectangles in real world and mathematical problems.

To assess whether students have “deep, authentic command” of this standard, I began by interviewing eighth-grade students and asking them:

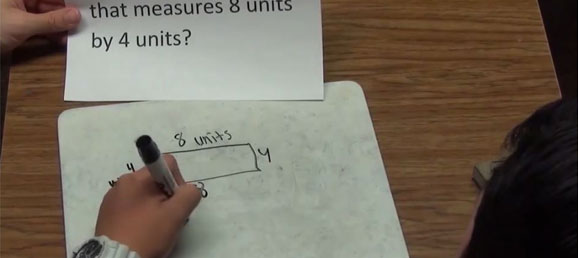

“What is the perimeter of a rectangle that measures 8 units by 4 units?”
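For reference, this first question needs only one application of the perimeter formula. A worked version is below, using l and w for the rectangle’s length and width (symbol names assumed here, not taken from the standard):

```latex
P = 2(l + w) = 2(8 + 4) = 2 \cdot 12 = 24 \text{ units}
```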

Let’s watch how Student #1 responded and see whether he demonstrated procedural skills and fluency as well as conceptual understanding (but not application, since this is not an application problem).

- Procedural skills and fluency? Check. He knows how to figure out the perimeter of the rectangle.

- Conceptual understanding? Check. He understands that the perimeter is the sum of the rectangle’s side lengths.

This result shouldn’t be too surprising. After all, he is an eighth grader being asked about a fourth-grade standard. I decided to follow up by having him do a different problem on the same standard:

“List the dimensions of a rectangle with a perimeter of 24 units.”
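One way to see what a complete answer could look like is to list the possibilities. The sketch below assumes whole-number side lengths (non-integer answers such as 5.5 by 6.5 are also valid) and uses a hypothetical helper name, `rectangles_with_perimeter`. Because the perimeter is 2 × (length + width), the length and width must add up to half the perimeter, which is 12 units here.

```python
def rectangles_with_perimeter(perimeter: int) -> list[tuple[int, int]]:
    """Hypothetical helper: list whole-number (length, width) pairs, with
    length >= width, whose perimeter 2 * (length + width) equals `perimeter`."""
    if perimeter % 2 != 0:
        return []  # 2 * (length + width) is always even for whole numbers
    half = perimeter // 2  # length + width must equal half the perimeter
    pairs = []
    for width in range(1, half // 2 + 1):  # stop at the square to avoid repeats
        pairs.append((half - width, width))
    return pairs


print(rectangles_with_perimeter(24))
# [(11, 1), (10, 2), (9, 3), (8, 4), (7, 5), (6, 6)]
```

The 8 by 4 rectangle from the first question is just one of six whole-number answers. That openness is part of what separates this version of the problem from the first.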

Does this problem seem remarkably different from the first one? Let’s see how he answers it:

- Procedural skills and fluency? Nope.

- Conceptual understanding? Nope.

I hope you are wondering what the heck just happened. Is it possible that we have students like him in our classes that initially appear to have a “deep, authentic command” but actually have superficial understandings? What was so different about the first question that allowed the student to appear to demonstrate procedural skill and fluency as well as conceptual understanding?

The answer? Depth of knowledge.

Unfortunately, this is not an isolated incident. Let’s watch how Student #2 responded to the same two questions.

- Procedural skills and fluency? Check. She knows how to figure out the perimeter of the rectangle.

- Conceptual understanding? Check. Although she didn’t explain herself the way Student #1 did, she appears to understand that the perimeter is the sum of the rectangle’s side lengths.

Now for the second question:

- Procedural skills and fluency? Nope.

- Conceptual understanding? Nope.

Again, we have a student who initially appears to have procedural skills and fluency as well as conceptual understanding. However, when we push just a little deeper, it is clear that her foundation is not solid.

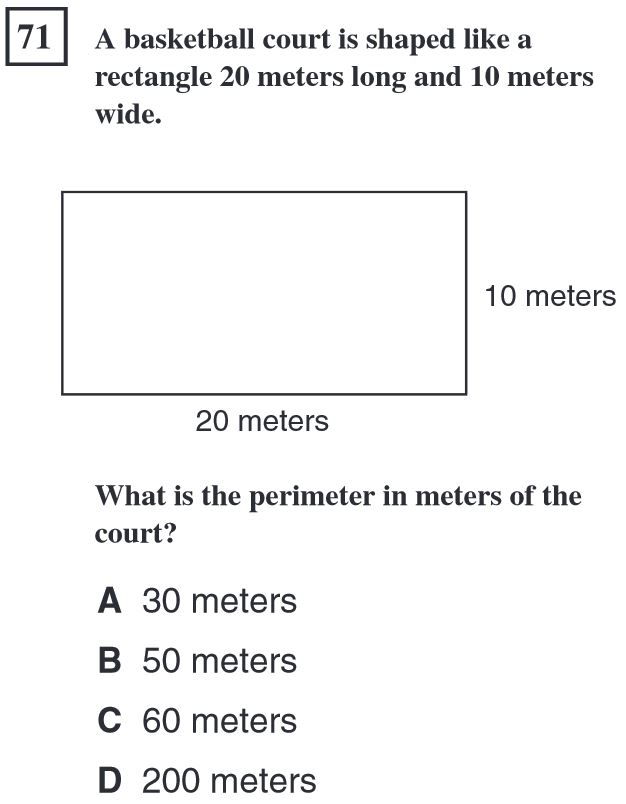

Consider the way this standard has been traditionally assessed on a state’s multiple choice end-of-year summative assessments. The problem below comes from California’s outgoing assessment.

I suspect that both students would have answered this question correctly, which would have given us a false positive about their mathematical understanding.

Now let’s look at how Student #3 answers these first two questions:

- Procedural skills and fluency? Check. He knows how to figure out the perimeter of the rectangle.

- Conceptual understanding? Check. He understands that the perimeter is the sum of the rectangle’s side lengths.

Now for the second question:

- Procedural skills and fluency? Check. He can find dimensions of a rectangle with a perimeter of 24 units.

- Conceptual understanding? Check. He understands that the perimeter is the sum of the rectangle’s side lengths.

Finally, we have a student who has demonstrated “deep, authentic command” of this standard. Or has he? I decided to ask him a third question:

“Of all the rectangles with a perimeter of 24 units, which one has the most area?”
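Computing the area of each candidate shows the pattern the third question is probing. This sketch again assumes whole-number side lengths and uses a hypothetical helper, `areas_for_perimeter`. Because it checks only whole numbers, it illustrates the answer rather than proving it for every possible rectangle.

```python
def areas_for_perimeter(perimeter: int) -> list[tuple[int, int, int]]:
    """Hypothetical helper: (length, width, area) for every whole-number
    rectangle with the given even perimeter, with length >= width."""
    if perimeter % 2 != 0:
        raise ValueError("whole-number side lengths require an even perimeter")
    half = perimeter // 2  # length + width
    return [(half - w, w, (half - w) * w) for w in range(1, half // 2 + 1)]


table = areas_for_perimeter(24)
for length, width, area in table:
    print(f"{length} x {width}: area {area}")
print("largest:", max(table, key=lambda row: row[2]))
```

The areas climb 11, 20, 27, 32, 35, 36 as the rectangle gets closer to a square. Among these candidates, the 6 by 6 square has the largest area: 36 square units.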

- Procedural skills and fluency? Nope.

- Conceptual understanding? Nope.

Once again, a student who appeared to demonstrate “deep, authentic command of mathematical concepts” wasn’t able to show it when given a slightly different problem. What do we make of all of this? What exactly is causing this, and where do we go from here?

The first step is clearly defining the problem so that we are all on the same page:

- Students appear to demonstrate “deep, authentic command of mathematical concepts” when given commonly used problems.

- However, with more challenging problems, the same students no longer seem to demonstrate that command. In this particular case, the first problem was DOK 1, the second problem was DOK 2, and the third problem was DOK 3. Clearly, that increase in depth of knowledge made a big difference.

Next we need a plan to tackle this problem:

- Educators must clearly understand why these problems are different from one another. I have created a Tool to Distinguish Between Depth of Knowledge Levels, with examples ranging from first grade through high school, which should help educators see the differences between the levels.

- Next, we need to practice implementing these problems so that every student is engaged in a problem at the right challenge level for them. I am not saying it is going to be easy. In fact, it is going to be a challenge for students and teachers alike. Many students are not initially willing to persevere through the problem-solving process. That being said, I hope these videos have convinced you that this is a fight worth fighting. This is how I have been implementing depth of knowledge, and I am currently working on some new ideas that I plan to blog about in the future.

- Last, we need a source that can provide us with a variety of free problems at depth of knowledge 2 and 3. Check out Open Middle. There are currently over 150 problems on the site, from kindergarten through high school. The key to this site’s success is that it is by the people, for the people. Almost half the problems come from people I have never met in person. If you have an idea for a problem, submit it here. Imagine if one teacher at each school submitted a problem…

Let’s get people talking about this. The more math educators are aware of this issue, the better equipped they will be to address it. To help in this cause, I am providing the student videos for you to download and use with your colleagues.

I think you’ve highlighted a very valuable area for teachers to focus on. Your video examples and questions really help identify student misconceptions AND teacher misconceptions, or as you accurately put it, “false positives.” Thanks for sharing this.

So we can encourage teachers to create or find (at Open Middle) these types of questions to use with their students. However, I believe teachers also need support with strategies for asking students follow-up questions that give teachers a better understanding of the misconceptions.

Here are my suggestions:

With question two: Delete the word “the” before “dimensions” so it reads, “List dimensions of a rectangle with a perimeter of 24 units.”

This question allows greater flexibility in student thinking and removes the emphasis that there is just one answer. I’m curious to know if this would have impacted the results.

Selfishly, I wanted more follow-up with these students:

Student 1 (part 2): Explain why you put the 24 units up here.

Student 2 (part 2): Explain to me what “large” means.

Student 3 (part 2): How did you initially come up with 10 and 2 as the dimensions? Walk me through your thinking.

Student 3 (part 3): Tell me more about what you mean by “top.” When you say “top” what are you answering in this question?

Thanks for all your hard work and contributions. I hope that we teachers continue to explore questions that challenge the DOK levels in addition to asking our students follow-up questions that give us a bigger window into their thinking.

Andrew, thanks for your comments. I agree with pretty much everything you said. With 20/20 hindsight, I wish I had reworded the questions slightly to lessen the chance that their wording caused the students’ difficulties.

Also, I definitely wish I had asked the students additional follow-up questions. That being said, that is the great thing about data in general. The role of good data, in pretty much every form, is to answer simpler questions and lead you to wonder about more complex questions… which then require more data to answer. It is a worthwhile cycle.

Thanks for continuing to push me.

Uncovering student understanding is critical here. Many times we assess students in exactly the same manner in which concepts and ideas are learned. I really like this idea of “false positives,” because once we accept that they exist, we can begin to examine why they happen.

Many times we pigeonhole our students’ learning into only one method of “conceptual” understanding. If we strive to teach beyond DOK 2, we open the conceptual door of understanding through exploration of numbers. Students that see how numbers come together are able to make the connections and identify relationships because they have built their own understanding. The end result: it doesn’t matter what DOK level question is asked.

If we want to improve instruction, it all comes back to how we assess. I’m loving this post and will definitely be sharing it.

Thanks, as always, Graham. I appreciate your feedback and especially agree with, “Students that see how numbers come together are able to make the connections and identify relationships because they have built their own understanding.”

Also, your Open Middle problems (http://www.openmiddle.com/tag/graham-fletcher/) have been really helpful. Thanks.

Your message here is powerful. I showed an English teacher these videos, and we discussed how they might help us develop instruction in our school district. With your permission, we would love to use your videos in a professional learning exercise. Thank you!

By all means, Kari, you are welcome to use them. All the content on my site is licensed under CC BY-NC-SA 4.0. You can read about what that entails here: http://creativecommons.org/licenses/by-nc-sa/4.0/deed.en_US.

I believe the MEP Maths curriculum addresses this problem. However, it is crucial that a child start young on it, from Reception or Year 1 of the curriculum, because algebraic concepts are already introduced at that stage and it is possible to teach them to a child that young. In the UK, Reception age is about 5 years old and Year 1 age is about 6. I’m speaking from experience as a Maths tutor: I have had the most success using MEP with kids from that age onwards. I tried using MEP with a girl who started at Year 5 of the curriculum even though she was 12 when she started (so should have been at Year 7), as I could see there was no way she could handle the Year 7 or even Year 6 material. She found Year 5 such a struggle. She did not have a good depth of understanding of Maths concepts; she had simply been copying Maths problem-solving methods. Give her something outside the norm of what she has seen and she is stumped, even though no new Maths concepts are required to solve it. Starting her from Year 1 would be a big investment of time and is not practical, but her gaps in Maths knowledge are apparent. I feel, however, that she would be able to pass her Maths GCSE satisfactorily if she practises a wide range of questions leading up to it. I can only hope that depth of understanding will dawn on her through varied exposure to different ways of solving problems.

I speak as someone who was not great at conceptual Maths, although I was a straight-A Maths student in high school, until I completed a degree in Philosophy at uni and then went back to night college to do A Level Maths. At that point I found my understanding of Maths had improved greatly over time, but I attribute a lot of that to the more logical way of thinking trained into me while studying Philosophy. Not everyone will follow in my footsteps, and it took a long time, but I believe a lot of the problems associated with depth of understanding in Maths have to do with how a person has learnt to think and analyse problems. It doesn’t come naturally to some people, but those people can be trained to think that way. Yet not many schools train people to think this way. IMO, philosophy should be a mandated subject in schools, but it isn’t at the moment; I’ve heard it may be only in France.

Thanks, Rach. Comments like this remind me of how much I still have to learn about how differently people teach math. I appreciate you sharing your views.

Robert,

This is so, so true when it comes to teachers and assessment! How often are teachers assessing for ‘right’ answers rather than depth of knowledge? I also work with teachers, and in my online classroom the module I am currently working on is about how to identify whether students have the procedural skills, fluency, conceptual understanding, and ability to apply.

We often categorize those who get the right answers as high achievers, but when these students are asked, “tell me why or how you know,” they can’t!

Wonderful article and I will be sharing these videos!! Thanks for sharing.

Thanks, Darlene. It certainly can be challenging to distinguish between students who “get the right answers” but can’t “tell me why or how you know.” That being said, it certainly is essential. Glad you liked it.

If you take those students who could not “List the dimensions of a rectangle with a perimeter of 24 units.” and showed them how to do it *once*, then many would then often be able to do it forever afterwards. The problem they have is not a lack of understanding of the mathematical concepts in Measurement, but confusion because they don’t get the question. They know exactly what a perimeter is, but they are effectively being asked an Algebra question (how is one variable related to another variable?) in the guise of a Measurement question, and that throws them.

If having been shown once they can’t relate that to the next example, then I’ll grant that they show a lack of conceptual understanding. But of Algebra, not Measurement.

For me there is no “deep” understanding of perimeter. It is the distance around an object, period. There simply is nothing else to understand. Yes, circle perimeters are harder than rectangles — because there’s a formula to fight through that many find difficult, not because they don’t conceptually understand what is being asked.

I know a student really doesn’t understand area when they attempt to answer an area question using perimeter or the like. (And that’s quite rare, because area is such a simple concept.) But I don’t conclude that a student fails to understand area conceptually just because they can’t figure out which shape of rectangle gives the biggest area. That’s a classic maximisation question, and I use it all the time in Calculus, Algebra and Graphing. But not Measurement.
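The algebraic framing in this comment can be made concrete with a short treatment of the third question. This is a sketch only, with l and w as assumed names for length and width. Fixing the perimeter at 24 ties the width to the length, and completing the square shows where the area peaks:

```latex
\begin{aligned}
2(l + w) &= 24 \;\Rightarrow\; w = 12 - l, \\
A(l) &= l\,w = l(12 - l) = 12l - l^2 = 36 - (l - 6)^2 \le 36.
\end{aligned}
```

Equality holds only when l = 6, which forces w = 6 as well. So among all rectangles with a perimeter of 24 units, including those with non-integer sides, the 6 by 6 square has the largest area: 36 square units.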

If I want to test a student’s conceptual understanding of area, then I do it via complex shapes. Can they divide an L shape into two rectangular components and add them together? At the next level: can they subtract a circle cut out of a square to get the area remaining? Those are area questions, not disguised Algebra.
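The two decomposition checks described here can be sketched in code. All dimensions below (a 10 by 8 rectangle with a 4 by 3 corner notch removed, and a 10 by 10 square with a circle of radius 3 cut out) are hypothetical and chosen only for illustration, as are the helper names.

```python
import math


def l_shape_area(outer_w: float, outer_h: float, notch_w: float, notch_h: float) -> float:
    """Hypothetical helper: area of an outer_w x outer_h rectangle with a
    notch_w x notch_h rectangle missing from one corner, found by splitting
    the L shape into two rectangles and adding them."""
    if not (0 < notch_w < outer_w and 0 < notch_h < outer_h):
        raise ValueError("the notch must fit strictly inside the outer rectangle")
    full_strip = outer_w * (outer_h - notch_h)   # strip spanning the full width
    side_piece = (outer_w - notch_w) * notch_h   # piece beside the notch
    return full_strip + side_piece


def square_minus_circle_area(side: float, radius: float) -> float:
    """Hypothetical helper: area left after cutting a circle out of a square."""
    if 2 * radius > side:
        raise ValueError("the circle must fit inside the square")
    return side ** 2 - math.pi * radius ** 2


print(l_shape_area(10, 8, 4, 3))                  # 50 + 18 = 68
print(round(square_minus_circle_area(10, 3), 2))  # 100 - 9π ≈ 71.73
```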

Hi Chester. I challenge you to test your assumption.

When you say “If you take those students who could not ‘List the dimensions of a rectangle with a perimeter of 24 units.’ and showed them how to do it *once*, then many would then often be able to do it forever afterwards.” what evidence do you have that this is true?

In my experience, the idea that we should show students math in every conceivable format they might encounter just makes better robots, not problem solvers. Perhaps you’re not a fan of this question, but I’d check out others on openmiddle.com and try them with your students to see more about what they know and don’t know.

Robert, I’m not suggesting we show them every situation. But we do need to distinguish algebra questions from measurement ones.