One of the most common concerns about implementing 3-Act Tasks (also known as problem-based lessons, application problems, or math modeling problems) is “How do you assess them?” Here are the four ways I use most often:

You don’t grade every single classwork assignment, do you? Similarly, you shouldn’t feel compelled to grade these types of problems either. While it may seem obvious, some teachers avoid using these problems because they feel it will be too time consuming to assess them later. If that’s what’s stopping you, then just skip the grading part, because not using them at all is even worse.

This is the most commonly used option and is relatively easy to implement if you need to get through a pile of 180 papers like secondary math teachers often do. The rubric originally came from the Smarter Balanced Assessment Consortium (SBAC) and consists of one point for a correct answer and one point for sufficient reasoning to justify the answer. Since I use this Problem Solving Framework when I do problem-based lessons, I just grade the “conclusion” section where students must write down their answer and explain how they got it.

One realistic concern with this rubric is that sometimes it’s hard to choose between assigning one point or zero points for a student’s explanation. So, this rubric can be easily modified to go from 0 to 5 points for correct answer and 0 to 5 points for sufficient reasoning to justify the answer. In that case, a student who gets the correct answer but whose reasoning is just OK might get a 5 for a correct answer but 2 points for his reasoning. Another issue with this method is that different teachers might give the same student different scores. This is a big concern if the problem is being used for a common assessment and leads to a need for Option #3.
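The two-part rubric and its 0-to-5 variant can be sketched in a few lines of Python. This is only an illustration: the function name `score_conclusion` and the `max_per_part` parameter are hypothetical and are not part of the SBAC rubric itself.

```python
def score_conclusion(answer_points, reasoning_points, max_per_part=1):
    """Total a two-part rubric: one part for the answer, one for the reasoning.

    max_per_part is 1 for the original rubric and 5 for the modified version.
    """
    for points in (answer_points, reasoning_points):
        if not 0 <= points <= max_per_part:
            raise ValueError(f"each part must be between 0 and {max_per_part}")
    return answer_points + reasoning_points


# Original rubric: correct answer, insufficient reasoning.
print(score_conclusion(1, 0))                  # 1 out of 2
# Modified rubric: correct answer (5), reasoning that is just OK (2).
print(score_conclusion(5, 2, max_per_part=5))  # 7 out of 10
```

The wider scale does not remove the judgment call; it only gives the grader more room to express it.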

This rubric is time consuming to create and use but is essential when consistent scoring is required. The process begins by determining what aspects of the problem solving process are worth assessing. This may include criteria such as:

- Getting the correct answer

- Using the correct units

- Explaining the formula used

- Writing as a narrative with complete sentences

This rubric is something of a hybrid between a general purpose rubric and a problem-specific rubric in that it applies to virtually all problems but it also gives you more specific information. It is called a practice rubric because you are evaluating students’ use of the Common Core State Standards’ Practice Standards. So, some of the criteria might be:

- Monitored and evaluated progress and changed course if necessary. (MP 1)

- Checked their answers to problems using a different method. (MP 1)

- Explained correspondences between equations, verbal descriptions, tables, and graphs. (MP 1)

- Justified conclusions, communicated them to others, and responded to the arguments of others. (MP 3)

- Stated the meaning of the symbols chosen. (MP 6)

- Carefully specified units of measure. (MP 6)

- Calculated accurately, efficiently, and expressed numerical answers with a degree of precision appropriate for the problem context. (MP 6)

If you liked those criteria and want to see a much longer list you can pick from, then check this out.

To make this more concrete, I will implement the three rubric options on three students’ responses to my sinkhole problem.

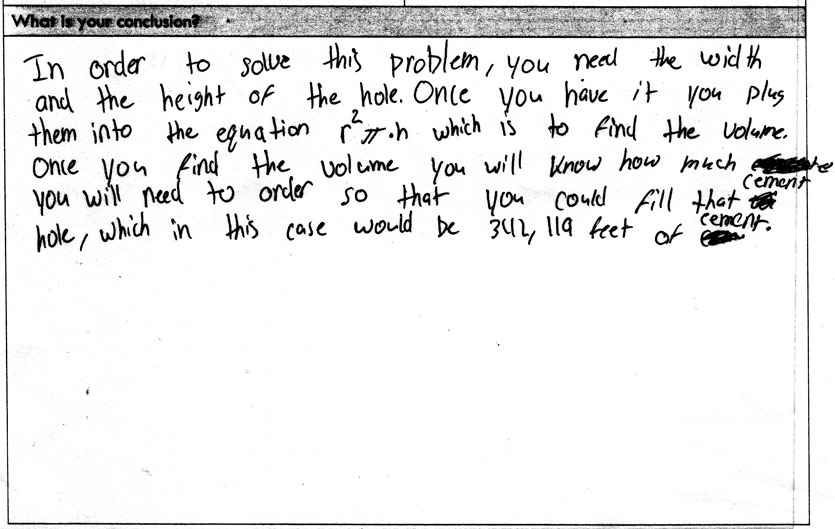

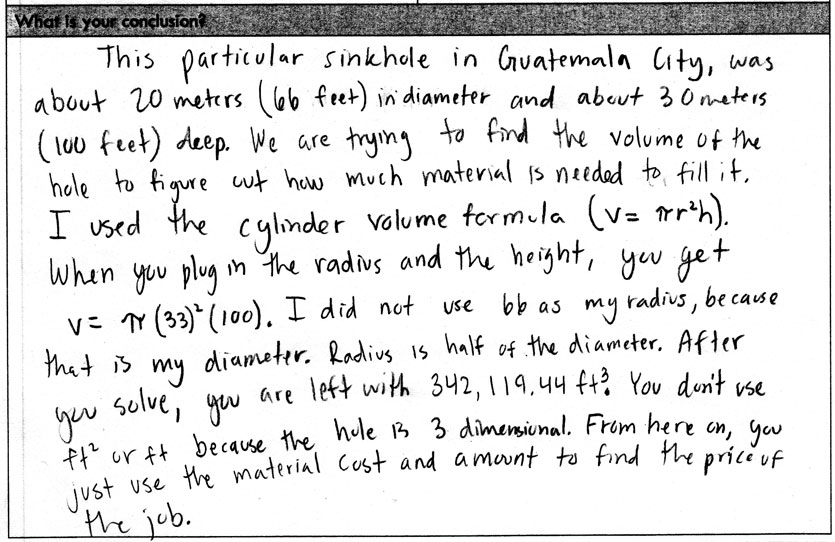

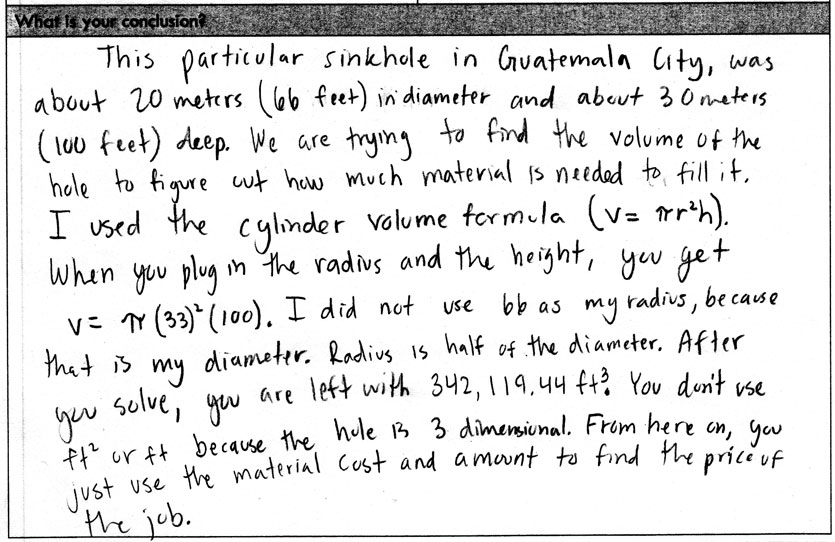

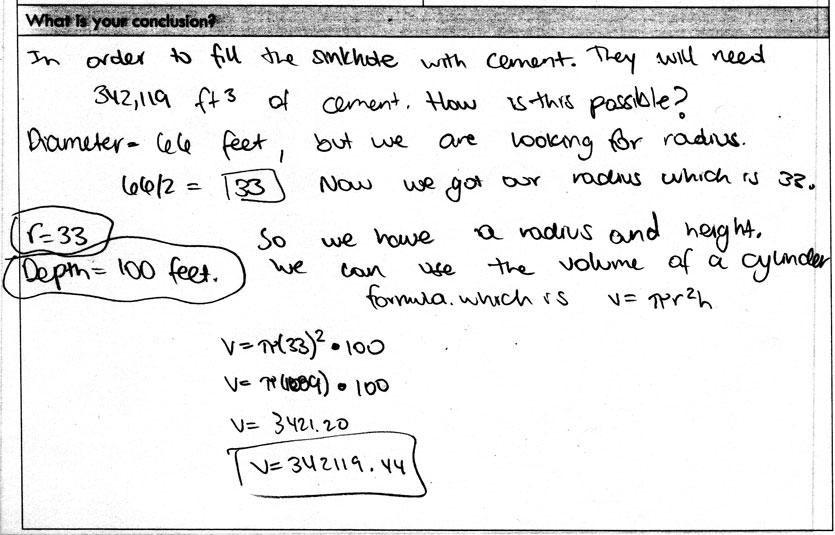

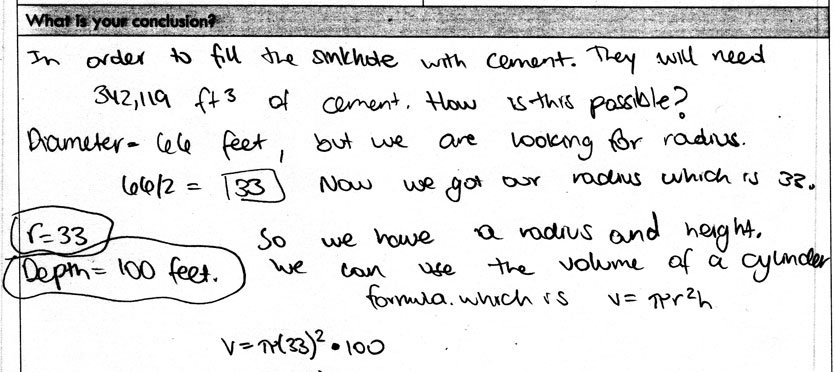

You can immediately see the problems with assigning 1 or 0 points with this first student. This student stated 342,119 feet when it should be cubic feet. I could make a case for 0, 0.5, or 1 point for correct answer. Let’s say 1 point though. Next comes the reasoning point. I would have wanted to read more about the process the student used. For example, you don’t plug the width (diameter) of the hole into the formula. You plug in half the width (radius), and the student never explains that, so I would give them a 0 for sufficient reasoning. So, if it were me, I would give the student 1 point total.
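To see why the radius explanation matters so much, here is a rough sketch that assumes the sinkhole is modeled as a cylinder. The dimensions below (66 feet wide, 100 feet deep) are assumed for illustration; they are not stated in this post, though they do reproduce the 342,119 figure.

```python
import math

def cylinder_volume(radius_ft, depth_ft):
    """Volume of a cylinder in cubic feet: pi * r^2 * h."""
    return math.pi * radius_ft ** 2 * depth_ft

width_ft = 66    # assumed diameter, not given in this post
depth_ft = 100   # assumed depth, not given in this post

correct = cylinder_volume(width_ft / 2, depth_ft)  # uses the radius
mistaken = cylinder_volume(width_ft, depth_ft)     # plugs in the diameter

print(round(correct))      # 342119 cubic feet
print(mistaken / correct)  # about 4: using the diameter quadruples the volume
```

Because the radius is squared, a student who plugs in the diameter ends up with roughly four times the volume, which is why a rubric might reward stating that step explicitly.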

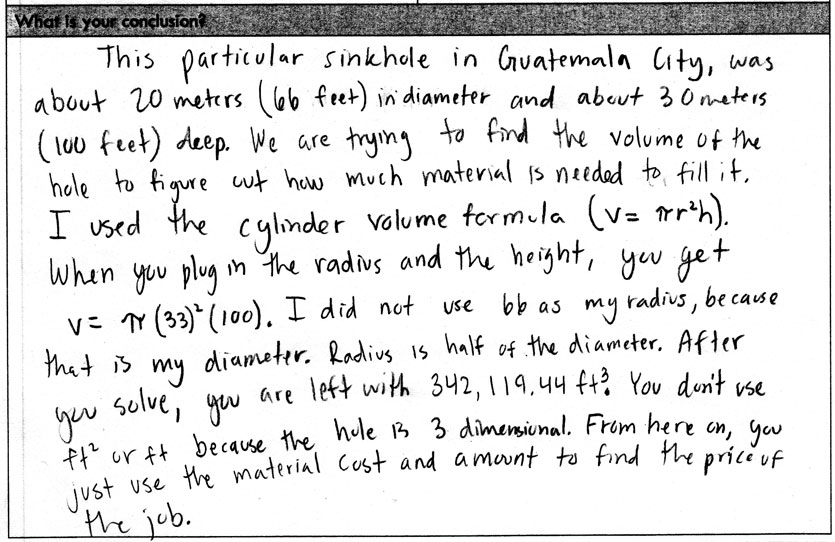

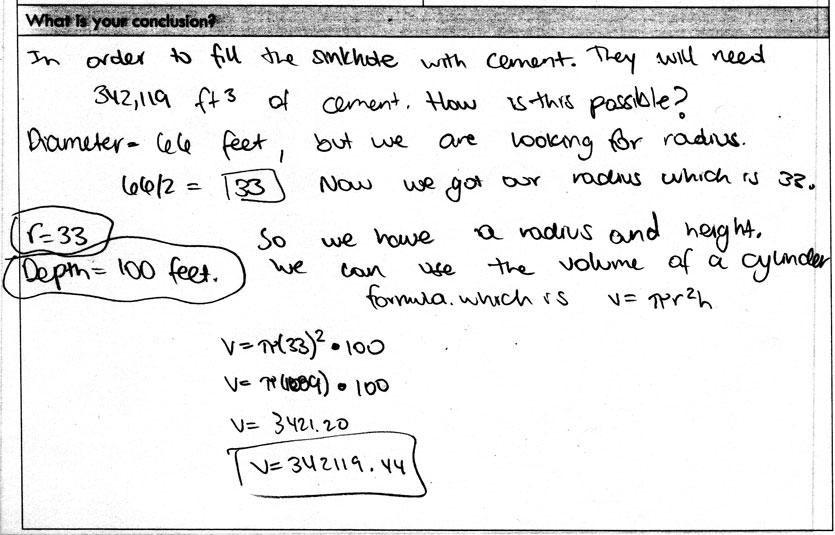

This student is easier to grade. The answer has the correct value and units, so 1 point for that. The reasoning is sufficient as well so another 1 point for that giving a total of 2 points.

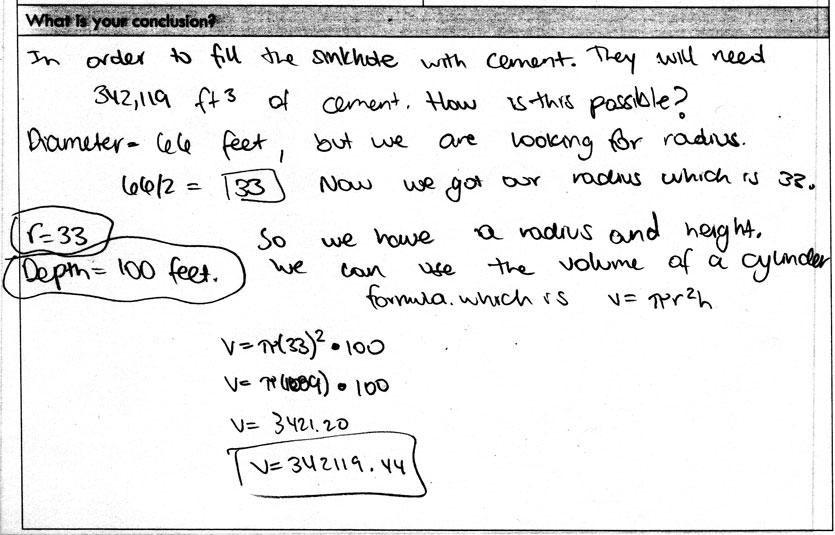

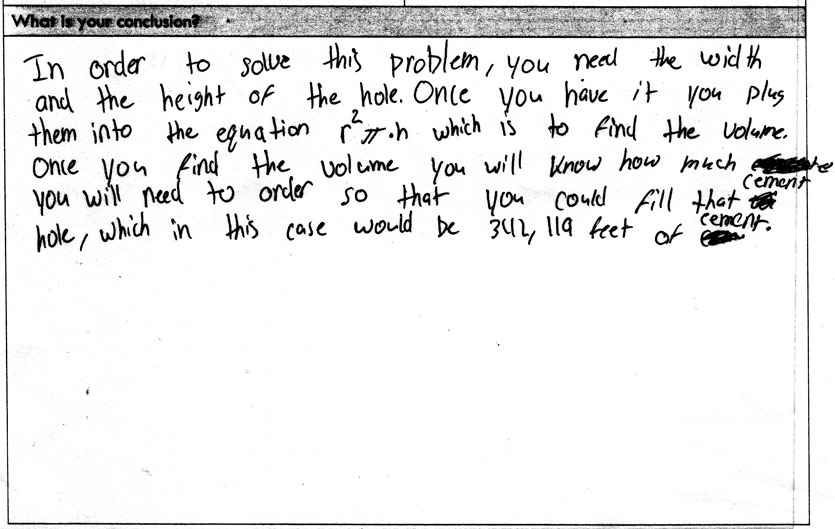

Like the second student this student earns 1 point for a correct answer. I’d also give the student 1 point for sufficient reasoning for a total of 2 points.

Let’s assume that we want to assess the four criteria listed below. Next I have to assign point values to each criterion. Obviously determining these point values will depend on the people making the rubric. For this example I will use:

- Getting the correct answer – 3 points

- Using the correct units – 1 point

- Explains that the radius is half of the diameter – 2 points

- Writing as a narrative with complete sentences – 2 points

Let’s revisit the first student now using this rubric:

- Getting the correct answer – 2 points

- Using the correct units – 0 points

- Explains that the radius is half of the diameter – 0 points

- Writing as a narrative with complete sentences – 2 points

He didn’t quite get the right answer because of the units so I took a point off of “Getting the correct answer” and another point off of “Using the correct units”. He didn’t explain anything about the radius so he lost two points there as well. 4 points total.

Next is the second student:

- Getting the correct answer – 3 points

- Using the correct units – 1 point

- Explains that the radius is half of the diameter – 2 points

- Writing as a narrative with complete sentences – 2 points

This student included every aspect I was looking for and earns all 8 points.

Finally we have the third student:

- Getting the correct answer – 3 points

- Using the correct units – 1 point

- Explains that the radius is half of the diameter – 2 points

- Writing as a narrative with complete sentences – 1 point

This was a tough one. I took off one point for writing the conclusion as a narrative but I could see assigning full points too. Obviously calibration would be critical to determine exactly what it would take to earn each point level. 7 points total.
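The arithmetic for this rubric can be checked with a short script. This is a minimal sketch rather than a grading tool: the dictionary keys and the `total` helper are names made up for illustration, while the point values are the ones assigned above.

```python
# Maximum points per criterion, as assigned in this example.
MAX_POINTS = {
    "correct answer": 3,
    "correct units": 1,
    "explains radius is half of diameter": 2,
    "narrative with complete sentences": 2,
}

# Scores given to each student above, in the same criterion order.
scores = {
    "student 1": [2, 0, 0, 2],
    "student 2": [3, 1, 2, 2],
    "student 3": [3, 1, 2, 1],
}

def total(points):
    """Sum one student's criterion scores after checking each against its maximum."""
    for earned, maximum in zip(points, MAX_POINTS.values()):
        if not 0 <= earned <= maximum:
            raise ValueError("score outside the range allowed for its criterion")
    return sum(points)

totals = {name: total(points) for name, points in scores.items()}
print(totals)  # {'student 1': 4, 'student 2': 8, 'student 3': 7}
```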

Let’s assume that we want to assess the four criteria listed below. You might pick these if they were the things you valued and wanted students to do more of. Next I have to assign point values to each criterion. Obviously determining these point values will depend on the people making the rubric. For this example I will use:

- Student explains how equations, words, pictures, and/or symbols are connected. – 2 points

- Student does not just state steps taken, but convinces reader that the steps they took are a correct way to approach problem. – 2 points

- Student carefully specifies units of measure and uses them consistently in conclusion. – 1 point

- Student accurately calculates a numerical value for the answer. – 3 points

Let’s revisit the first student now using this rubric:

- Student explains how equations, words, pictures, and/or symbols are connected. – 0 points

- Student does not just state steps taken, but convinces reader that the steps they took are a correct way to approach problem. – 1 point

- Student carefully specifies units of measure and uses them consistently in conclusion. – 0 points

- Student accurately calculates a numerical value for the answer. – 3 points

This student did not do a good job connecting the context with the math content, so he gets 0 points for explaining how they are connected. He somewhat justified his conclusions, earning 1 of the 2 points there, and lost a point for not using the correct units. He did calculate accurately so he got full points for that. 4 points total.

Next is the second student:

- Student explains how equations, words, pictures, and/or symbols are connected. – 1 point

- Student does not just state steps taken, but convinces reader that the steps they took are a correct way to approach problem. – 2 points

- Student carefully specifies units of measure and uses them consistently in conclusion. – 1 point

- Student accurately calculates a numerical value for the answer. – 3 points

This student did a good but not perfect job of explaining how her equation connected to the context, so I took off a point for that. I wouldn’t argue too hard against full points here though. She justified her conclusion well for another 2 points. She used the correct units (cubic feet) and explained why. Finally she calculated accurately. 7 points total.

Finally we have the third student:

- Student explains how equations, words, pictures, and/or symbols are connected. – 0 points

- Student does not just state steps taken, but convinces reader that the steps they took are a correct way to approach problem. – 1 point

- Student carefully specifies units of measure and uses them consistently in conclusion. – 0 points

- Student accurately calculates a numerical value for the answer. – 3 points

This student’s conclusion is light on a narrative that explains how anything is connected. There isn’t much of a convincing argument either. So, 0 points and 1 point for the first two criteria. No units are specified, so 0 points there. Finally she calculated accurately. 4 points total.

A couple of final notes:

- With any rubric being used as a common assessment, you’ll need to go through a calibration process. A team of teachers that plan to use the rubric should find exemplars that show what it would take to earn each of the scores for each category. Clearly this process will take time, but once every grader is on the same page, the scores are much more likely to be comparable and reliable.

- Remember that you can always mix it up and have students check out the rubric and/or evaluate themselves. For example, if you showed them the rubric after they solved the problem but before they explain their reasoning, that would send them in the right direction. Alternatively, you could show it to them after they are completely done, and this would give them a chance to make adjustments.
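One simple way a team might check whether calibration is working is to have two teachers score the same papers and see how often their scores match. The sketch below uses percent exact agreement, which is just one possible measure and not something this post prescribes; the function name and all of the scores are made up for illustration.

```python
def exact_agreement(rater_a, rater_b):
    """Fraction of papers on which two raters gave the identical score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of papers")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)

# Made-up totals (out of 8) from two teachers scoring the same five papers.
teacher_1 = [4, 8, 7, 6, 5]
teacher_2 = [4, 8, 8, 6, 3]

print(exact_agreement(teacher_1, teacher_2))  # 0.6
```

A low agreement rate would suggest the team needs more exemplars or clearer descriptions for each point level before using the rubric for a common assessment.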

I hope that at least one of these assessment methods resonated with you. What do you agree with? Where have I missed the mark? Are there any other options for assessing 3-Act Tasks that I missed? Please let me know in the comments.

Is there any scientific evidence about what is the “best” way to assess? By “scientific evidence” I mean something like this [https://ies.ed.gov/ncee/wwc/FWW]. I think any teacher could make their own assessment but we need to know what is supported by studies.

Hi Xavier. Honestly, I’m not sure. For better and for worse, much of what I share is based on personal experiences and not on scientific evidence.

Thanks, Robert, for sharing this with us. I pointed that out because, as a teacher, you hear so much about education and you don’t know whom you should pay attention to. In general, we are missing this kind of scientific evidence. Thanks for receiving my comment so well.

I saw your link. I chose one activity at random. The scientific evidence stated that there is no significant difference between applying it or not. I also read Dan Meyer’s doctoral dissertation, with the same conclusion. I think the “best” educational practices are the ones that make knowledge and interest in learning last in the students’ minds (long term). I find it very hard to measure the long-term effects of new approaches.

Besides, my experience (which I will try to justify scientifically) says that what works best for one teacher, one group, some students… may not be the best for other ones.

In my opinion, students should meet a variety of teachers, lesson plans, assessment methods…

What drives your use of points?

I’m trying to move away from points as much as possible as they invariably become the purpose of the exercise for students. Currently, with a 3-act I’m looking for: 1.) Does the student show evidence of a conceptual understanding to a level that they can work from the starting point to a resolution? 2.) Does the student have supporting work (mechanics) that is error free (or properly amended)? 3.) Can the student communicate and defend his or her conclusion using mathematical reasoning? At no point does my evaluation of a student for the task go into the gradebook. It serves as fodder for dialogue with the student.

For the class in general I assign a mastery level (I use an Alpha through Zeta scale – with each corresponding to some description of student readiness) on a learning target-by-learning target basis. It is admittedly susceptible to some measure of subjectivity, but I like to think I can do a reasonable enough job assigning an honest mastery level.

Hi Will. I appreciate your thoughtfulness. I know what you mean in terms of grades being the extrinsic motivation over the intrinsic motivation of completing a task because it’s valuable. I also don’t want them to shut down when they see the grades, as opposed to reflecting on how they can improve.

In some ways, while not technically points, a mastery level shares similarities in that it is trying to help measure progress. It sounds like you are using these problems formatively, and that is great. However some people use them summatively as well (think end of year standardized assessments) so it is worth having a conversation about how to assess them. I’m not saying it’s ideal, but I believe it’s important to recognize.

Will, would you mind sharing your mastery level plan in more detail? I am interested in switching to standards based grading and it sounds like what you are doing may be helpful. Thank you.

[I’m going to copy/paste/modify a response I gave to a bigger question I received yesterday.]

(Note: I’m still super new to this. I wouldn’t call myself an expert at all. Below is what I’ve tried, but I’ll be changing some of it.) For the sake of ease, and at the risk of sounding self-important, I’m going to mark topics that can be explored in greater depth in their own right. I’m going to call them Cans of Worms (or CoWs) because, frankly, that amuses me.

The first thing I did was make a list of concrete Learning Targets (CoW 1) for the students (“I can…[list some action the student takes].”). Example: “I can identify square, rectangle, triangle, and pentagon.” The core of these should be built against your standards.

I set up lessons so students are given the opportunities to learn and develop the things my LT list identifies. As they progress, I assess them against the LTs. My students do a lot of work in the classroom, none of which I grade or is for points (my least favorite word). Instead, I use it to give feedback/feed forward (CoW 2). It’s an attempt to prompt reflection and provide the beginning of a roadmap forward for them.

After students have begun to show signs of having mastered an LT to a deep level, I formatively assess with a quiz I’ve drafted (many of us call them “Show Me’s” or something similar to avoid words that trigger anxiety) (CoW 3), and rate them on a scale of mastery (CoW 4), not points out of points.

Right now, my mastery scale is set up 1 – 4 (Briefly summarized: 1-Basic concepts missing., 2-Signs of learning emerging. Misconceptions or missing knowledge present., 3-Serviceable content knowledge and proficient application., 4-Complete content knowledge with almost no application mastery.) The definitions of these are on my wall.

Students have as many attempts as they need to convince me of their mastery level throughout the semester for each LT. I do this as an attempt to reinforce that learning to reach a goal is more important than the grade. The points associated with each ranking obfuscate this, thus the first change I mention below.

What I might do differently:

-Eliminate the number ranking system going instead to “Proficient”, “Not Yet Proficient”, and “Not Attempted”. Final score in the class would then be based on how many of each exist for the student.

-Instead of giving formal quizzes for each LT, identify what “Proficient” means (maybe via a 1-point Rubric? – CoW 5), and then let students present anything they think meets those criteria for each LT. I really want to put them in control of the decision making that way. I want them asking, “Does this meet the criteria,” rather than me defining that bar and robbing them of the metacognitive development.

-Do more self-assessment and peer-assessment (CoW 6) along the way before a formal submission of evidence to me for the ranking of mastery.

I would love for this to be a dialogue, so please feel free to keep the conversation going! I think I need to start a blog so I have a repository for forms and rationales. We’re at our best working together.

Also, check out @KentWire, @RickWormeli2, @MarzanoResearch, and @andburnett123. They all play different roles in education, and have been invaluable to me with respect to assessment.

Thank you!

I love this! Thank you!

I’m curious as to what you use for a summative assessment?

What are your thoughts on peer scoring?

I don’t have much to say other than I can see it being useful if peers provide feedback that can be used to improve each other.

Thanks, Robert, for sharing your ideas in such a transparent way.

Re – subjectivity of grading via a rubric. ALL grading is subjective. For example, a multiple choice exam which seems “objective” is indeed subjective in that the test writer exercised subjective discretion in choosing certain items to include and others to omit. There is no such thing as an objective evaluation.

At the same time, it’s important that evaluation is not arbitrary or capricious. Thus, a clear rubric focused on attributes that have been addressed during instruction, written in student-friendly language, and illustrated by exemplars (i.e., samples of work at varying levels of competence) helps to make performance expectations/success criteria for students transparent and teachers’ evaluations of student work more defensible. Calibration by various raters to increase inter-rater reliability is critical too. This approach is supported by the assessment literature.

Great point Kim. I hadn’t thought about subjectivity in that way, but now that you mention it, it’s clear that you’re right. It certainly is hard (or impossible) to eliminate biases from the work a person does.